Internship as an NLP Engineer...

Back from a long gap… I was enjoying my Spring Break while writing my last post, but after a wonderful road trip I realized I still didn't have an internship (this is what PEER PRESSURE looks like!!!). So, starting in March, I put a lot of effort into hunting for an internship. Unfortunately, most US internship applications had already passed their deadlines (another reason: I had given up on finding an internship in the current terrible market…). Fortunately, most summer internship recruiting was just starting in China, and even more fortunately, I got an offer from Baidu as an NLP intern. (LeetCode is the worst, most evil website I have ever seen in the world, btw :)

About This Position

- Construction of a Post-hoc Experiment Framework Based on Business Needs

- Development of Automated Experiment Tools & Pipeline and Quantitative Metrics Tools

- Analysis and Validation of Internal Data (Domain Data Sets) from Different Business Perspectives, Exploring Scenario-based Post-hoc Experiment Validation Plans, including but not limited to:

  - Support for current priority business initiatives, validating the impact of new external/internal enriched data in business scenarios.

  - Accumulation of business experience and development of a general framework for validation within business contexts.

  - Establishment of a connection between platform-side experimental results and customer-facing conclusions to provide smarter evaluation solutions (security & permission issues to be discussed).

Objectives:

- Short-term Goal (Urgent Support): Analyze the impact of priority business data across different scenarios.

- Mid-term Goal: Accumulate business experience, develop a general validation framework for business scenarios, and align with PM evaluation standards to collaboratively establish scenario-based evaluation standards and plans.

- Long-term Goal: Establish an automated scenario-based validation process that accommodates diverse user requirements across different scenarios.

- P0 | Post-hoc Experiment Framework: Improve the post-hoc performance evaluation framework from an application perspective, providing reliable business evaluation results.

- P0 | Experiment Validation: Based on the post-hoc evaluation framework, encapsulate business-oriented experiment validation tools to thoroughly and comprehensively verify the impact of core vertical data on business outcomes.

In plain English, all I needed to do was train LLMs on domain (business) data and general data, analyze the models' performance on open-source benchmarks, and finally create an experiment methodology and build an evaluation platform for other colleagues to use.

Outcome

In short, I did build an evaluation platform! On this platform, a user enters the API endpoint where the trained LLM is deployed and selects one or more benchmarks, such as C-Eval and TruthfulQA. The platform then saves all the results, and the user can click a button to aggregate the required results into a report. The fanciest part is that the platform is highly flexible: users can also upload their own structured custom test cases and generate reports from them!
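To make the "select benchmarks, run, aggregate" flow a bit more concrete, here is a minimal sketch, not the platform's actual code. It assumes the deployed model can be wrapped as a plain Python callable (in reality this would be an HTTP call to the user's API endpoint), that every benchmark or uploaded custom test set is a list of (prompt, expected answer) pairs, and that scoring is simple exact-match accuracy. Real benchmarks like C-Eval (multiple choice) and TruthfulQA have their own scoring protocols, and all function names here are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A test case is a (prompt, expected_answer) pair. Built-in benchmarks and
# user-uploaded custom test sets are both assumed to use this structure.
TestCase = Tuple[str, str]


def run_benchmark(model: Callable[[str], str], cases: List[TestCase]) -> float:
    """Query the model on every case and return exact-match accuracy."""
    if not cases:
        return 0.0
    correct = sum(
        1 for prompt, expected in cases
        if model(prompt).strip() == expected.strip()
    )
    return correct / len(cases)


def evaluate(model: Callable[[str], str],
             benchmarks: Dict[str, List[TestCase]]) -> Dict[str, float]:
    """Run every selected benchmark and collect one score per benchmark."""
    return {name: run_benchmark(model, cases) for name, cases in benchmarks.items()}


def aggregate(results: Dict[str, float]) -> Dict[str, float]:
    """Add an unweighted average across benchmarks as a simple report summary."""
    summary = dict(results)
    summary["average"] = sum(results.values()) / len(results) if results else 0.0
    return summary


if __name__ == "__main__":
    # Stand-in for a deployed model behind an API endpoint.
    def toy_model(prompt: str) -> str:
        return {"1+1=?": "2", "Capital of France?": "Paris"}.get(prompt, "unknown")

    selected = {
        "toy_math": [("1+1=?", "2"), ("2+2=?", "4")],
        "custom_upload": [("Capital of France?", "Paris")],
    }
    print(aggregate(evaluate(toy_model, selected)))
    # prints {'toy_math': 0.5, 'custom_upload': 1.0, 'average': 0.75}
```

Keeping "run" and "aggregate" as separate steps mirrors the button-click design: raw per-benchmark scores are stored first, and any report can be built from them later.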

However, many features could be improved (I'll leave this to whoever keeps working on it lol). For example, users cannot delete results; only an administrator can delete them on the backend. Also, when multiple models are running, results are saved only after all computations are done. So if one model shuts down by accident, all the results are lost, even for the other models that had already finished their computations…
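One possible fix for the "all results lost" problem is to persist each model's result as soon as that model finishes, instead of waiting for the whole batch. The sketch below is only an illustration under assumptions: each model's evaluation is a hypothetical zero-argument job returning a dict, results go to one JSON file per model, and jobs run in a thread pool. It uses the standard library's `concurrent.futures.as_completed` plus a write-to-temp-then-`os.replace` pattern so that a crash never leaves a half-written file.

```python
import json
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Tuple


def save_result_atomically(out_dir: str, model_name: str, result: dict) -> str:
    """Write one model's result to <out_dir>/<model_name>.json.

    Data goes to a temporary file first and is then moved into place with
    os.replace(), so a crash mid-write never leaves a half-written result file.
    """
    os.makedirs(out_dir, exist_ok=True)
    final_path = os.path.join(out_dir, f"{model_name}.json")
    fd, tmp_path = tempfile.mkstemp(dir=out_dir, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as f:
            json.dump(result, f)
        os.replace(tmp_path, final_path)
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)  # clean up the partial temp file
        raise
    return final_path


def evaluate_all(
    jobs: Dict[str, Callable[[], dict]], out_dir: str
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Run every model's job concurrently and save each result as soon as that
    job finishes. Returns (saved paths, error messages) keyed by model name;
    a crashed job only loses its own result."""
    saved: Dict[str, str] = {}
    errors: Dict[str, str] = {}
    with ThreadPoolExecutor() as pool:
        futures = {pool.submit(job): name for name, job in jobs.items()}
        for fut in as_completed(futures):
            name = futures[fut]
            try:
                saved[name] = save_result_atomically(out_dir, name, fut.result())
            except Exception as exc:
                errors[name] = repr(exc)
    return saved, errors


if __name__ == "__main__":
    def finished_job() -> dict:
        return {"accuracy": 0.8}

    def crashed_job() -> dict:
        raise RuntimeError("model server went down")

    out_dir = tempfile.mkdtemp()
    saved, errors = evaluate_all({"model_a": finished_job, "model_b": crashed_job}, out_dir)
    print(sorted(saved), sorted(errors))  # prints ['model_a'] ['model_b']
```

Here `model_a`'s result survives even though `model_b` crashed, which is exactly the behavior the current platform is missing.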

Some cool new features are still not implemented. For example, what if we want to test open-source or third-party models? Since the platform is designed for Baidu's Ernie Bot LLM, other models (open-source LLMs, or even closed ones such as ChatGPT) cannot be tested on it. Furthermore, users can aggregate results and get quantitative numbers, but can we generate a readable, formal report? This can definitely be done. The simplest way is to prepare a template and fill in all the blanks. Alternatively, we could embed another LLM that analyzes the data and writes the report (using some prompt engineering, or by training a dedicated domain model).
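The "prepare a template and fill in the blanks" idea could look something like this minimal sketch using Python's built-in `string.Template`. The template text, its placeholders (`$model`, `$table`, `$best`, `$worst`), and the assumption that scores arrive as a benchmark-to-score dict on a 0-1 scale are all hypothetical, not the platform's real report format.

```python
from string import Template
from typing import Dict

# Hypothetical report template; the placeholders are illustrative only.
REPORT_TEMPLATE = Template(
    "Evaluation Report: $model\n"
    "\n"
    "Scores by benchmark:\n"
    "$table\n"
    "\n"
    "Strongest benchmark: $best\n"
    "Weakest benchmark: $worst\n"
)


def render_report(model: str, scores: Dict[str, float]) -> str:
    """Fill the template's blanks from aggregated benchmark scores (0-1 scale)."""
    if not scores:
        raise ValueError("no scores to report")
    table = "\n".join(
        f"  - {name}: {score:.1%}" for name, score in sorted(scores.items())
    )
    best = max(scores, key=scores.get)
    worst = min(scores, key=scores.get)
    return REPORT_TEMPLATE.substitute(model=model, table=table, best=best, worst=worst)


if __name__ == "__main__":
    print(render_report("my-finetuned-model", {"C-Eval": 0.62, "TruthfulQA": 0.48}))
```

`substitute` raises a `KeyError` if any blank is left unfilled, which is a cheap safeguard against shipping a half-empty report. The LLM-based approach would replace the fixed template text with generated analysis, but the same aggregated scores would be its input.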

Anyway, this 3-month internship was fun, and I learned a lot from it.

What did I learn from this internship?

Many tools!

Hadoop - stores large files across distributed machines, and MapReduce can be used to process that data further.

Spark - a great replacement for MapReduce, processing data based on its RDD (Resilient Distributed Dataset) concept!

(Some internal tools can't be mentioned here.)

Training a large language model, Ernie Bot, on 16 x 40 GB GPUs!

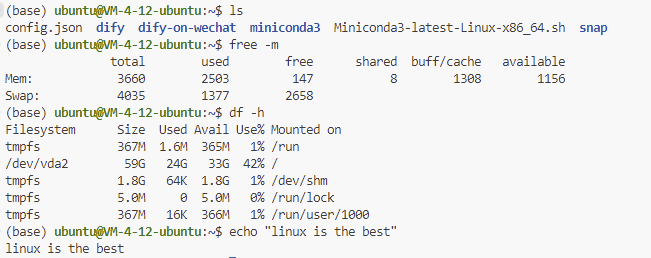

FlaskAPI - to build the platform's backend.
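For anyone who hasn't met MapReduce (the model behind Hadoop's processing and the thing Spark improves on), the classic word-count example shows its three phases: map each record to key-value pairs, shuffle (group) the pairs by key, then reduce each group. This is a single-machine, pure-Python simulation written only to illustrate the idea; a real Hadoop job distributes each phase across many machines.

```python
from collections import defaultdict
from itertools import chain
from typing import Dict, Iterable, List, Tuple


def map_phase(line: str) -> List[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in one input line."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by key, as the framework does between phases."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values collected for one key."""
    return key, sum(values)


def word_count(lines: List[str]) -> Dict[str, int]:
    mapped = chain.from_iterable(map_phase(line) for line in lines)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())


if __name__ == "__main__":
    print(word_count(["the cat sat", "The dog sat"]))
    # prints {'the': 2, 'cat': 1, 'sat': 2, 'dog': 1}
```

In Spark, the same pipeline is usually written as a chain of RDD transformations, roughly `lines.flatMap(str.split).map(lambda w: (w, 1)).reduceByKey(lambda a, b: a + b)`, and Spark keeps intermediate data in memory instead of writing it to disk between phases, which is a big part of why it replaced MapReduce for many workloads.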

Many ideas!

It was my first experience working at a giant company, so by watching how people worked there, I learned about their management style, working style, and so on. I also gained a better understanding of the current state of LLMs.

So, yes, a wonderful internship experience!

Personal Review

Even though this internship was great, there is still (a lot of) room for improvement in my future work.

When I trained multiple models, I sometimes lost track because I mixed up model names and models… The results then looked ridiculous, so next time I should double-check the model names before training. Ensure quality first, then pursue speed.

Making a reasonable plan is necessary. When I was asked to handle multiple things at once, I felt really confused. So before starting work, it would be great to list all the tasks that should be done that day and decide their priorities. Having a clear plan and handling tasks one by one (or in parallel) would help me manage my work better.

Also, communicating with my mentor is important; this needs no explanation. Thanks to my mentor for helping me in this position.

Yes, here we are! I did it, and now I should get ready for graduation and a real full-time job. Bless me…